Quantifying and Using Uncertainty in Deep Learning-based UAV Navigation

Quantifying and using uncertainty in Bayesian deep learning systems for robust UAV navigation

Introduction

Autonomous systems, such as Unmanned Aerial Vehicles (UAVs) and self-driving cars, increasingly rely on Deep Neural Networks (DNNs) to handle critical functions within their navigation pipelines (perception, planning, and control). While DNNs are powerful, deploying them in safety-critical roles demands that they accurately express their confidence in predictions. This is where Bayesian Deep Learning (BDL)

In this post, we describe how to capture and use uncertainty along a navigation pipeline of BDL components. Moreover, we assess how uncertainty quantification throughout the system impacts the navigation performance of a UAV that must fly autonomously through a set of gates arranged in a circle within a simulated environment (AirSim).

The Navigation Task and Architecture Overview

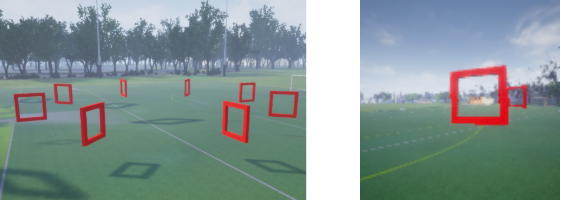

The goal of the autonomous agent (i.e., the UAV) is to navigate through a set of gates with unknown locations arranged on a circular track in the AirSim simulator, as presented in Figure 1.

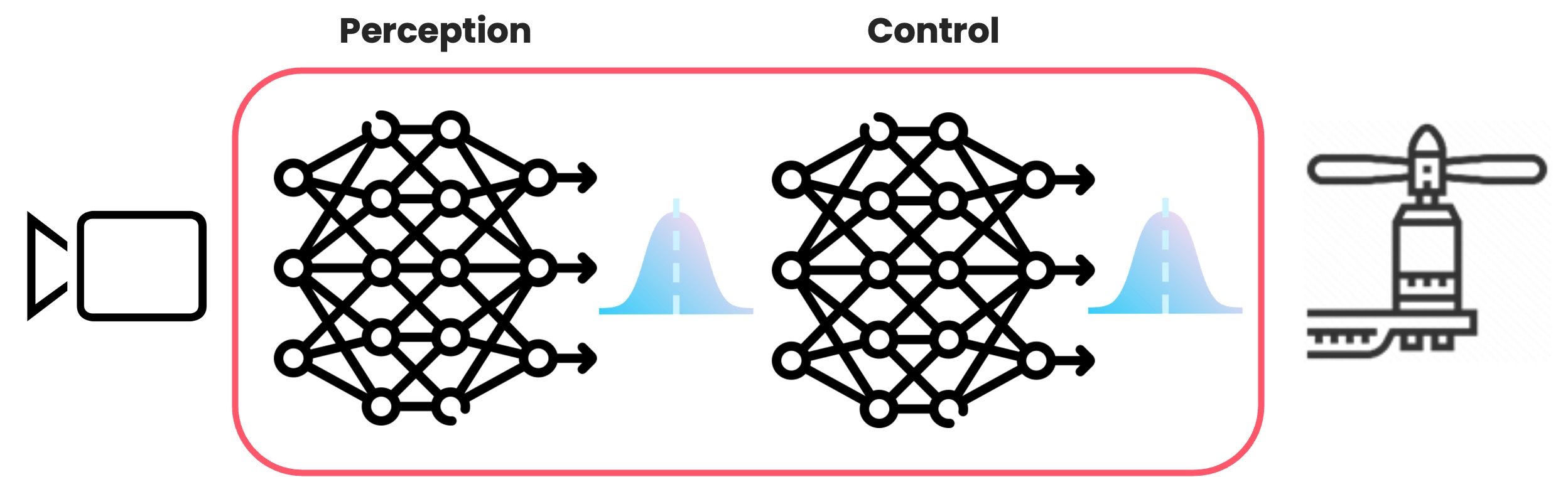

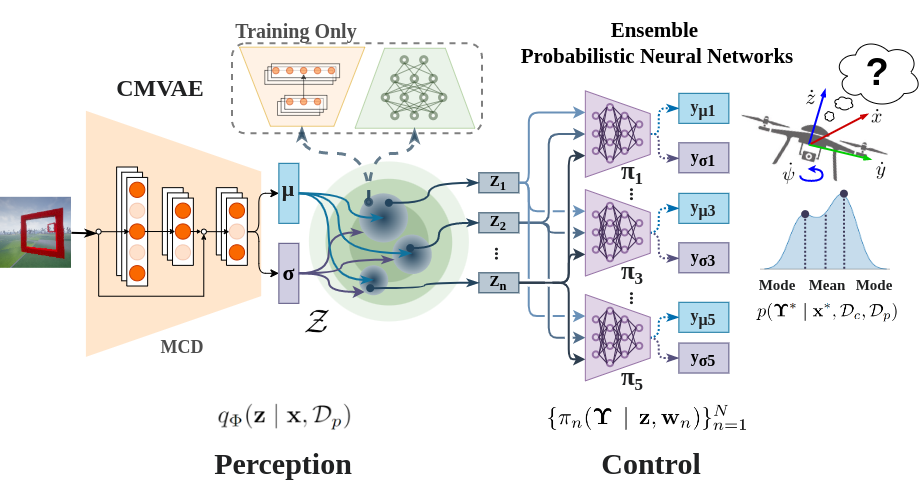

We consider a minimalistic end-to-end deep learning-based navigation architecture to study uncertainty propagation and its use. In our experiments, the autonomous navigation architecture therefore consists of two neural network components, one for perception and the other for control, as presented in Figure 2.

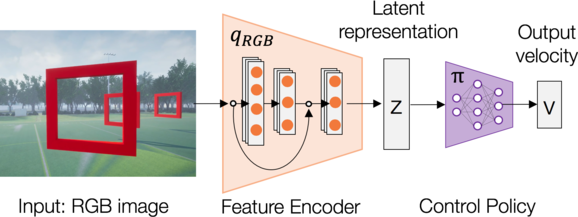

To create an instance of the architecture above, we can follow the approach presented in

Nevertheless, Bonatti et al.

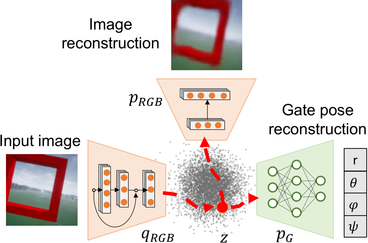

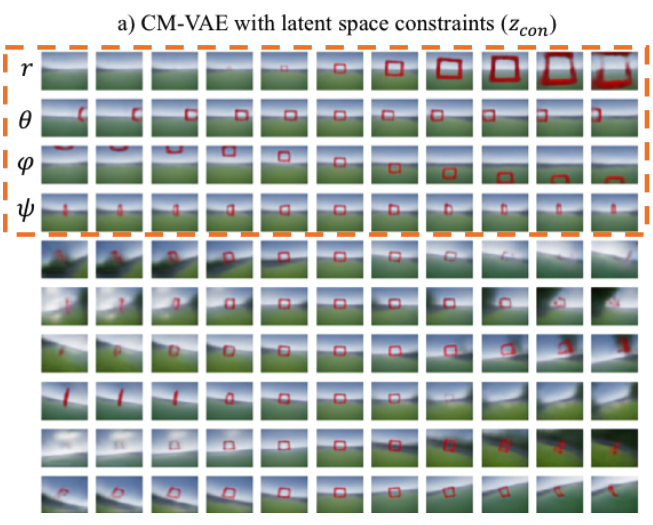

Moreover, the additional network block for predicting the gate pose is connected to the VAE in an unusual way. The regression block uses only the first four variables of the latent vector at the output of the CM-VAE encoder. In addition, each of these four latent variables is connected to a dedicated regressor for one of the predicted spherical coordinates (radius, polar, azimuth, yaw). This imposes stronger regularization on the latent space during training: the first four latent variables receive an additional flow of error gradients corresponding to pose prediction errors, which forces these four variables to disentangle, as presented in Figure 5.
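The wiring described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the latent size `LATENT_DIM`, the hidden width, and the helper names `init_head` and `predict_gate_pose` are hypothetical, and the tiny two-layer heads stand in for whatever regressors the actual model uses.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM = 10  # hypothetical latent size; the post does not state it
POSE_DIMS = ("radius", "polar", "azimuth", "yaw")


def init_head(hidden=8):
    """Create one small regressor: scalar input -> hidden layer -> scalar output."""
    return {
        "w1": rng.normal(size=(1, hidden)) * 0.1,
        "b1": np.zeros(hidden),
        "w2": rng.normal(size=(hidden, 1)) * 0.1,
        "b2": np.zeros(1),
    }


# One dedicated head per predicted pose coordinate.
heads = {name: init_head() for name in POSE_DIMS}


def predict_gate_pose(z):
    """Map latent vectors of shape (N, LATENT_DIM) to gate poses of shape (N, 4)."""
    outputs = []
    for i, name in enumerate(POSE_DIMS):
        head = heads[name]
        z_i = z[:, i:i + 1]  # only latent variable i feeds head i
        hidden = np.maximum(z_i @ head["w1"] + head["b1"], 0.0)  # ReLU
        outputs.append(hidden @ head["w2"] + head["b2"])
    return np.concatenate(outputs, axis=1)


z = rng.normal(size=(3, LATENT_DIM))
pose = predict_gate_pose(z)  # shape (3, 4)
```

Because head `i` only sees latent variable `i`, pose errors send gradients back to the first four latent variables only, which is the mechanism the paragraph above credits for the disentanglement.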

Learning Perception Representations

To build the perception component, we use the Dronet architecture

Learning a Probabilistic Control Policy

Once the perception component is trained, we use only the encoder of the trained CM-VAE to get a rich compact representation (latent vector) of the input image. The downstream control task (control policy $\pi$) uses an MLP network to operate on the latent vectors $z$ at the output of the CMVAE encoder $q_{\phi}$ to predict UAV velocities. To this end, a probabilistic control policy network is added at the output of the perception encoder $q_{\phi}$, forming the UAV navigation stack. The probabilistic control policy network $\pi_{w}(\Upsilon \mid \mathbf{z})$ predicts the mean and the variance for each velocity command given a perception representation $z$ from the encoder $q_{\phi}$, i.e., \(\Upsilon \sim \mathcal{N}\big(\mu_{w}(z), \sigma^{2}_{w}(z)\big)\), where \(\Upsilon_{\mu} = \{\mu_{\dot{x}}, \mu_{\dot{y}}, \mu_{\dot{z}}, \mu_{\dot{\psi}}\}\) and \(\Upsilon_{\sigma^{2}} = \{\sigma^{2}_{\dot{x}}, \sigma^{2}_{\dot{y}}, \sigma^{2}_{\dot{z}}, \sigma^{2}_{\dot{\psi}}\}\). For training the probabilistic control policy, we use imitation learning with a dedicated control dataset $\mathcal{D}_c$, and the heteroscedastic loss function
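As a point of reference, a widely used heteroscedastic loss is the Gaussian negative log-likelihood, in which the network predicts a mean and a log-variance per output. The sketch below assumes that form and the log-variance parameterization; both are assumptions for illustration, and the function name `gaussian_nll` is hypothetical rather than taken from the post.

```python
import numpy as np


def gaussian_nll(y, mu, log_var):
    """Heteroscedastic Gaussian negative log-likelihood, averaged over all entries.

    The constant 0.5 * log(2 * pi) is omitted since it does not affect training.
    """
    return np.mean(0.5 * np.exp(-log_var) * (y - mu) ** 2 + 0.5 * log_var)


# A single velocity target that the policy misses by 1.0 (squared error = 1.0).
y = np.array([[1.0]])
mu = np.array([[0.0]])

loss_overconfident = gaussian_nll(y, mu, np.array([[-4.0]]))  # tiny predicted variance
loss_matched = gaussian_nll(y, mu, np.array([[0.0]]))         # variance equals squared error
loss_underconfident = gaussian_nll(y, mu, np.array([[4.0]]))  # very large predicted variance
```

With this loss, the matched case scores lowest: claiming a small variance while being wrong is penalized by the first term, and claiming a large variance everywhere is penalized by the second.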

Following the work by

Autonomous Navigation Overview

After training the components of the minimalistic navigation architecture, we obtain autonomous navigation flight that drives the UAV through the red gates as shown in Figure 6.

Quantifying Uncertainty in the DNN-based Navigation System

Uncertainty From Perception Representations

Although the CMVAE encoder \(q_{\phi}\) employs Bayesian inference to obtain latent vectors \(\mathbf{z}\), CMVAE does not capture epistemic uncertainty since the encoder lacks a distribution over parameters $\phi$. To capture uncertainty in the perception encoder, we follow prior work from

To approximate Equation \eqref{eq:postEncoder} we take a set \({\Phi = \{\phi_{m}\}^{M}_{m=1}}\) of encoder parameter samples \(\phi_{m} \sim p(\Phi \mid \mathcal{D}_{p})\), to obtain a set of latent samples \(\{z_{m}\}^{M}_{m=1}\) from the output of the encoder \(q_{\Phi}(\mathbf{z} \mid \mathbf{x}, \mathcal{D}_{p})\). In practice, we modify the CMVAE by adding a dropout layer in the encoder. Then, we use Monte Carlo Dropout (MCD)
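The sampling procedure can be sketched with a toy encoder. Everything here is a stand-in: the layer sizes, the random weights, and the function names `encode` and `mc_dropout_latents` are hypothetical, and the real encoder is a trained network rather than two random matrices. The only point is that dropout stays active at inference time, so each forward pass corresponds to one parameter sample \(\phi_{m}\).

```python
import numpy as np

rng = np.random.default_rng(0)

IN_DIM, HIDDEN, LATENT_DIM = 16, 32, 10  # hypothetical sizes
W1 = rng.normal(size=(IN_DIM, HIDDEN)) * 0.1
W2 = rng.normal(size=(HIDDEN, LATENT_DIM)) * 0.1


def encode(x, drop_rate=0.2, stochastic=True):
    """Toy encoder with one dropout layer that can stay active at inference."""
    h = np.maximum(x @ W1, 0.0)  # ReLU
    if stochastic:
        keep = rng.random(h.shape) >= drop_rate
        h = h * keep / (1.0 - drop_rate)  # inverted dropout scaling
    return h @ W2


def mc_dropout_latents(x, num_samples=32):
    """Run M stochastic forward passes and stack the latent samples: (M, LATENT_DIM)."""
    return np.stack([encode(x) for _ in range(num_samples)])


x = rng.normal(size=IN_DIM)
z_samples = mc_dropout_latents(x)  # shape (32, 10)
z_mean = z_samples.mean(axis=0)    # point estimate of the latent vector
z_var = z_samples.var(axis=0)      # spread across passes, a proxy for epistemic uncertainty
```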

Handling Input Uncertainty In The Control Policy

In BDL, downstream uncertainty propagation assumes that a neural network component can handle or admit uncertainty at its input. In our case, this implies that the neural network for control must handle the uncertainty coming from the perception component. To do that, we consider using the BNN with LV inputs (BNN+LV)

The integrals from the equations above are intractable, and we rely on approximations to obtain an estimation of the predictive distribution. The posterior \(p(\mathbf{w} \mid \mathcal{D}_{c})\) is difficult to evaluate. Thus, we can approximate the inner integral using an ensemble of neural networks

The outer integral is approximated by taking a set of samples from the perception component latent space. Latent representation samples are drawn using the encoder mean and variance \(\mathbf{z} \sim \mathcal{N}(\mu_{\phi},\sigma^{2}_{\phi})\). For brevity, we directly use the samples obtained in the perception component \(\{z_{m}\}^{M}_{m=1} \sim q_{\Phi}(\mathbf{z} \mid \mathbf{x}, \mathcal{D}_{p})\) to take into account the epistemic uncertainty from the previous stage. Finally, the predictions we get from passing each latent vector $\mathbf{z}$ through each ensemble member are used to estimate the posterior predictive distribution in Equation \eqref{eq:postPredDist}. The predictive distribution \(p(\Upsilon^{*} \mid \mathbf{x}^{*}, \mathcal{D}_{c},\mathcal{D}_{p})\) from Equation \eqref{eq:post_pred_dist_whole_system} takes into account the uncertainty from both system components.
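The nested sampling described above amounts to passing every latent sample through every ensemble member. The following sketch uses the sample counts reported later in the post (32 latent samples, 5 ensemble members); the latent size, the linear "policies", and the name `policy_forward` are hypothetical stand-ins for the trained MLPs.

```python
import numpy as np

rng = np.random.default_rng(0)

M, K = 32, 5        # latent samples and ensemble members, as used in the experiments
LATENT_DIM = 10     # hypothetical latent size
NUM_COMMANDS = 4    # x, y, z, and yaw velocities

# Stand-in for the latent samples coming from the perception encoder.
z_samples = rng.normal(size=(M, LATENT_DIM))

# Each toy ensemble member has one linear head for the mean and one for the log-variance.
ensemble = [
    {
        "w_mu": rng.normal(size=(LATENT_DIM, NUM_COMMANDS)) * 0.1,
        "w_log_var": rng.normal(size=(LATENT_DIM, NUM_COMMANDS)) * 0.1,
    }
    for _ in range(K)
]


def policy_forward(member, z):
    """Return predicted means and variances, each of shape (M, NUM_COMMANDS)."""
    mu = z @ member["w_mu"]
    var = np.exp(z @ member["w_log_var"])  # exponentiate so variances stay positive
    return mu, var


per_member = [policy_forward(member, z_samples) for member in ensemble]
mus = np.stack([mu for mu, _ in per_member], axis=1)          # (M, K, 4)
variances = np.stack([var for _, var in per_member], axis=1)  # (M, K, 4)
num_predictions = mus.shape[0] * mus.shape[1]
```

Each of the `M * K` entries is one Gaussian prediction, so the set as a whole forms a mixture that approximates the predictive distribution over velocity commands.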

From the control policy perspective, using multiple latent samples $\mathbf{z}$ can be seen as taking a better “picture” of the latent space (perception representation) to gather more information about the environment. Interestingly, we can also make a connection between our sampling approach and the works

Finally, to control the UAV, we use the deep ensemble expected value of the predicted velocities, as suggested in the literature
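One common way to summarize a set of Gaussian predictions is to treat them as a uniformly weighted mixture and compute its mean and variance. The sketch below assumes that treatment; the split into "aleatoric" and "epistemic" terms follows the usual law-of-total-variance decomposition and is offered as an illustration, not as the exact procedure used in the post. The name `mixture_moments` and the example numbers are hypothetical.

```python
import numpy as np


def mixture_moments(mus, variances):
    """Summarize S Gaussian predictions of shape (S, 4) as a uniform mixture.

    Returns the mixture mean, the average predicted variance (aleatoric part),
    the variance of the means (epistemic part), and their sum (total variance).
    """
    mean = mus.mean(axis=0)
    aleatoric = variances.mean(axis=0)
    epistemic = mus.var(axis=0)
    return mean, aleatoric, epistemic, aleatoric + epistemic


# Two made-up predictions for (x, y, z, yaw) velocities.
mus = np.array([[1.0, 0.0, 0.0, 0.2],
                [1.2, 0.1, 0.0, -0.2]])
variances = np.full((2, 4), 0.01)

command, aleatoric, epistemic, total = mixture_moments(mus, variances)
```

Here `command` is the expected value that would be sent to the UAV, while `total` indicates how much the individual predictions disagree and how noisy each one claims to be.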

Navigation Performance Evaluation

The goal of the UAV is to navigate through a set of gates with unknown locations, forming a circular track. In AirSim, a track is entirely defined by a set of gates, their poses in the space, and the agent navigation direction. For perception-based navigation, the complexity of a track resides in the “gate-visibility” difficulty

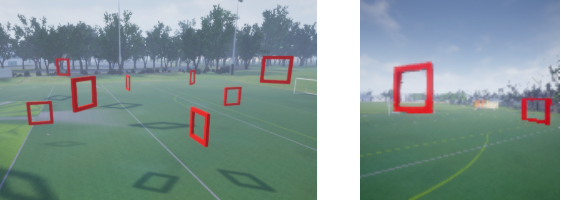

We evaluate the navigation system using a circular track with eight equally spaced gates positioned initially at a radius of 8 m and constant height, as shown in Figure 8. A natural way to increase track complexity is to add a random displacement to the position of each gate, i.e., to introduce operational domain shift (a factor that influences model predictive uncertainty). A track without random gate displacement is circular. Gate position randomness alters the shape of the track and affects gate visibility, as presented in Figure 9. Shifted images therefore become more likely: the UAV field of view (FoV) may contain no visible gate, a partially visible gate, or multiple gates.

To assess the system performance and robustness to perturbations in the environment, we generate new tracks adding an offset to each gate radius and height with random noise. We specify the Gate Radius Noise (GRN) and the Gate Height Noise (GHN) with two levels of track noise, as follows:

\[\begin{align*} \text{Noise level 1} \begin{cases} GRN \sim \mathcal{U}[-1.0, 1.0)\\ GHN \sim \mathcal{U}[0, 2.0) \end{cases} & \;\;\;\;\; \text{Noise level 2} \begin{cases} GRN \sim \mathcal{U}[-1.5, 1.5)\\ GHN \sim \mathcal{U}[0, 3.0) \end{cases} \end{align*}\]

In this regard, with this experimental setup we seek to answer the following research question:

RQ-1: Can we improve the UAV performance and robustness to perturbations of the environment by using an uncertainty-aware DL-based navigation architecture?
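For concreteness, the perturbed tracks used to study this question can be generated along the following lines. This is a sketch under assumptions: the function name `make_track`, the `base_height` value, and the plain Cartesian output are hypothetical and do not reflect AirSim's own API or coordinate conventions. The gate count, nominal radius, and noise ranges are the ones given above.

```python
import numpy as np

# Uniform noise ranges for gate radius (GRN) and gate height (GHN), per noise level.
NOISE_LEVELS = {
    1: {"grn": (-1.0, 1.0), "ghn": (0.0, 2.0)},
    2: {"grn": (-1.5, 1.5), "ghn": (0.0, 3.0)},
}


def make_track(noise_level=None, num_gates=8, radius=8.0, base_height=0.0, seed=0):
    """Return gate positions of shape (num_gates, 3) as (x, y, height) in meters."""
    rng = np.random.default_rng(seed)
    angles = np.linspace(0.0, 2.0 * np.pi, num_gates, endpoint=False)
    radii = np.full(num_gates, radius)
    heights = np.full(num_gates, base_height)  # base_height is a hypothetical value
    if noise_level is not None:
        cfg = NOISE_LEVELS[noise_level]
        # Generator.uniform samples from the half-open interval [low, high).
        radii = radii + rng.uniform(*cfg["grn"], size=num_gates)
        heights = heights + rng.uniform(*cfg["ghn"], size=num_gates)
    return np.stack([radii * np.cos(angles), radii * np.sin(angles), heights], axis=1)


nominal_track = make_track()              # perfect circle, constant height
noisy_track = make_track(noise_level=2)   # each gate displaced in radius and height
```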

Navigation Models Setup

Navigation Models Training Datasets. We use two independent datasets, one for each component in the navigation pipeline. The perception CMVAE uses a dataset ($\mathcal{D}_p$) of 300k images where a gate is visible and gate-pose annotations are available. The control component uses a dataset ($\mathcal{D}_c$) of 17k images with UAV velocity annotations. $\mathcal{D}_c$ is collected by flying the UAV in a circular track with gates, using traditional methods for trajectory planning and control. The perception dataset is divided into 80% for training and the remaining 20% for validation and testing. The control dataset uses a split of 90% for training and the remaining 10% for validation and testing. In both cases, the image size is 64×64 pixels.

Navigation Models Baselines. Ideally, we would expect the UAV to have a more robust and stable navigation performance with a full uncertainty-aware navigation architecture and by using the expected value of the predicted velocities

| Navigation Model | Perception Encoder | LRS | Control Policy | CPS |
|---|---|---|---|---|

In Table 1, models $\mathcal{M}_1$ to $\mathcal{M}_3$ only partially capture uncertainty in the pipeline since they use a deterministic perception component (CMVAE). For the control component, $\mathcal{M}_1$ and $\mathcal{M}_2$ take 32 and 1 latent representation samples (LRS), respectively, and pass them to an ensemble of 5 probabilistic control policies that captures epistemic and aleatoric uncertainty. $\mathcal{M}_3$ uses 32 LRS, and its control component is completely deterministic. Finally, $\mathcal{M}_4$ represents our Bayesian navigation pipeline, where the perception component captures epistemic uncertainty using MCD with 32 forward passes for each input to get 32 latent representation predictions. To ease the computation, perception predictions are directly used as latent variable samples in downstream control. The control component uses an ensemble of 5 probabilistic control policies, obtaining 160 control prediction samples (CPS).

Results

Table 2 shows the performance of the UAV navigation models. Moreover, the videos below provide a qualitative performance comparison of each model in the UAV navigation task.

| Navigation Model | Gates Passed @ Track Noise Level 1 | Gates Passed @ Track Noise Level 2 |
|---|---|---|

Based on the results of the experiments, we can answer RQ-1 by saying that the uncertainty-aware DL-based navigation architecture marginally improves the UAV performance and robustness to perturbations of the environment. The surprising point, however, is that the full Bayesian navigation architecture $\mathcal{M}_4$ performs only slightly better than the partial uncertainty-aware architecture $\mathcal{M}_2$. This leads us to question the benefit of the full Bayesian navigation architecture and, more precisely, the tradeoff between the gain in performance and robustness and the required computational resources, since the simpler partial uncertainty-aware $\mathcal{M}_2$ provides similar performance.

Naturally, based on these observations, our next question is:

RQ-2: What is the reason for this behavior and performance in the full uncertainty-aware (Bayesian) architecture $\mathcal{M}_4$ compared to other partial uncertainty-aware navigation architectures?

Leveraging System Uncertainty for Better Navigation Performance

Understanding the System Components’ Predictive Uncertainty

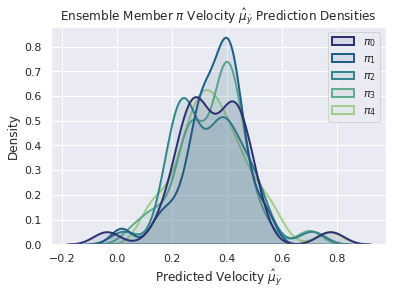

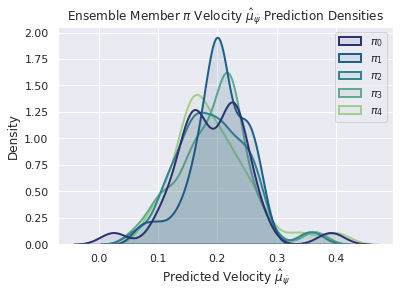

To understand what is going on during the UAV autonomous mission, let's probe the predictions of each component of the fully Bayesian navigation architecture when it faces one of the situations that arise when adding noise to the tracks, as shown in Figure 8. In particular, consider the double-gate case from Figure 9 and its generated predictions in Figure 10 below:

This controlled experiment reveals that the ambiguity introduced in the input image of the UAV DNN-based navigation architecture (two gates) is also reflected in the predicted control actions. The predicted control commands that move the UAV towards one of the two gates, i.e., $\hat{\dot{y}}$ (lateral velocity, left or right) and $\hat{\dot{\psi}}$ (yaw rotation, clockwise or counterclockwise), present multimodal distributions for each ensemble member in the control component. This suggests the possibility of two likely values for the control command, which is a clear indication of uncertainty in the control command.
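A small synthetic example helps to see what a bimodal command distribution implies for its expected value. The samples below are made up for illustration (they are not the predictions from the experiment), with half of them steering left and half steering right; the variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical lateral-velocity samples: one mode per gate, in m/s.
steer_left = rng.normal(loc=-1.0, scale=0.1, size=80)
steer_right = rng.normal(loc=1.0, scale=0.1, size=80)
y_vel_samples = np.concatenate([steer_left, steer_right])

# Expected value of the command over all samples.
mean_command = y_vel_samples.mean()

# Fraction of samples that lie within 0.3 m/s of that expected value.
support_near_mean = np.mean(np.abs(y_vel_samples - mean_command) < 0.3)
```

In this toy case the expected value sits between the two modes, in a region that almost none of the individual predictions support. This is one reason to look at the shape of the predictive distribution and not only at its mean.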

Conclusion

TBD

For more in-depth information about this topic, please check the papers

If you found this useful, please cite this as:

Arnez Yagualca, Fabio Alejandro (Nov 2025). Quantifying and Using Uncertainty in Deep Learning-based UAV Navigation. Fabio Arnez - Website. https://FabioArnez.github.io.

or as a BibTeX entry:

@article{arnez-yagualca2025quantifying-and-using-uncertainty-in-deep-learning-based-uav-navigation,

title = {Quantifying and Using Uncertainty in Deep Learning-based UAV Navigation},

author = {Arnez Yagualca, Fabio Alejandro},

journal = {Fabio Arnez - Website},

year = {2025},

month = {Nov},

url = {https://FabioArnez.github.io/blog/2025/UQ-BDL-UAV-System/}

}
